What makes a successful TV show?

This analysis and model attemts to determine the factors that influence high or low IMDb ratings for TV shows. All generes are examined, and while most originate in the United States, there are a few from the UK and elsewhere included.

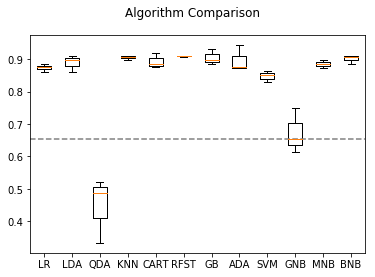

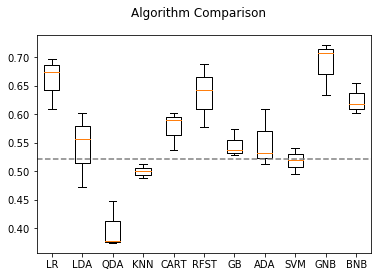

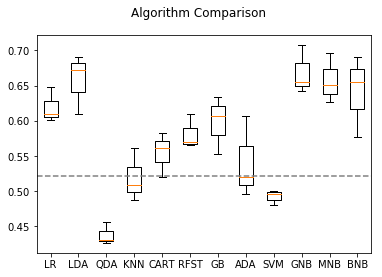

Two separate models are developed. In both, the top and bottom rated shows are classified as winners and losers, respectively, and an array of 12 classisfiers are applied using cross validation to identify the best performing model. 25% of the data was reserved as a test set, and cross-validation scores and test set scores are both shown in the tables below. Baseline score is 0.52.

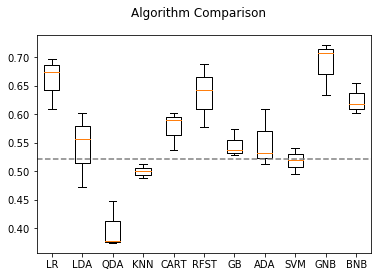

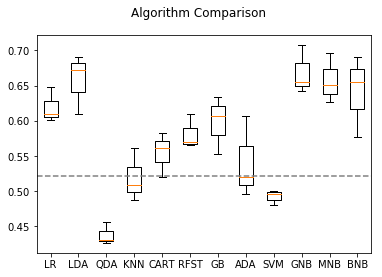

The first utilizes natural language processing (NLP) on the IMDb summary descriptions of each show. Term Frequency - Inverse Document Frequency and Count Vectorization were used on n-grams of size 2-4 were used. With both vectorization techniqes, Random Forrest and Naive Bayesian classifiers were most successful, with the highest score of 0.642 was achieved using TF-IDF vectorization and a Multinomial Naive Bayes classifier. The n-grams with highest cumulative score are identified as the most significant factors in the model, giving a clue as to the words in a summary description that foretell a show’s likelihood of being a winner or loser.

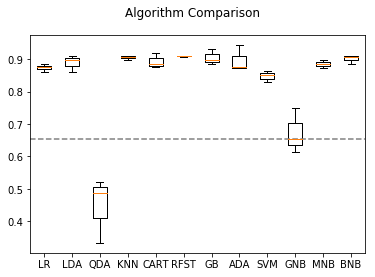

A second model, using factors such as genre, lenght, schedule times, network and format was also built, and the same set of 12 classifiers was applied. The ADA Boost classifier achieved the top score of 0.92 using these factors.

Data collection and cleanup was tedious, and involved multiple runs of webscraping IMDb pages on show ratings, then using the TVmaze API to return show detals. Unless interested in these details, the reader is encouraged to skip to the section titled “Modeling Section” a bit more than halfway through this notebook.

Results Summary:

From the NLP models, it seems shows featuring adult characters in crime and drama series set in times before or after the present in New York will fare better than reality or animated series featuring children or teens and highlighting pop culture.

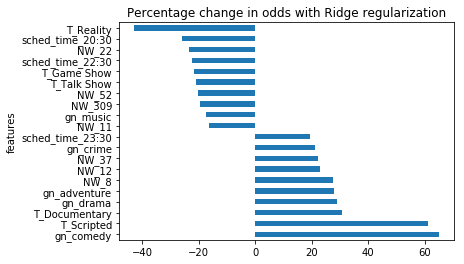

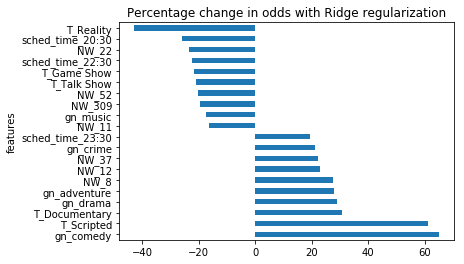

The model on the factors other than the summary showed similar tendencies. Realty formats were the strongest negative factor in predicting success, while the scripted format was the strongest positive predictor. Game and Talk shows were negative, while crime, science fiction, comedy, drama and documentaries were positive predictors. Shows aired by HBO and BBC predicted success, while the lower rated shows were found more predominantly on MTV, E!, Comedy Central and Lifetime.

Though interesting associations have been found, it must be said that nothing in the techniques used here can be interpreted as causality. For example, it cannot be said that reality shows featuring teenagers will always flop. This report is based on initial efforts to determine factors that may influence a show’s success, and have shown a path for future more detailed modeling. Suggested future paths include textual analysis of critics’ reviews, analysis based on cast or producers, analysis of differences in rating based on audience demographics, and a more detailed look at the connection between genre and the type/style of show.

# Import libraries needed for scraping and saving results.

# Additional libraries needed for modeling, analysis and display will be imported when needed.

import requests

import pandas as pd

from bs4 import BeautifulSoup

import pickle

Data Acquisition: List of top rated TV Shows

# Retrieve current top 250 TV shows webpage

url = "http://www.imdb.com/chart/toptv/"

r = requests.get(url)

html = r.text

html[0:200]

u'\n\n\n\n<!DOCTYPE html>\n<html\nxmlns:og="http://ogp.me/ns#"\nxmlns:fb="http://www.facebook.com/2008/fbml">\n <head>\n <meta charset="utf-8">\n <meta http-equiv="X-UA-Compatible" content="IE=ed'

# Use Beautiful soup to extract the imdb numbers from the webpage

soup = BeautifulSoup(html, "lxml")

# Scrape the IMDb numbers for the 250 top rated shows

show_list = []

for tbody in soup.findAll('tbody', class_='lister-list'):

for title in tbody.findAll('td', class_='titleColumn'):

show_list.append(str(title.findAll('a')).split("/")[2])

show_list

['tt5491994',

'tt0185906',

'tt0795176',

'tt0944947',

'tt0903747',

'tt0306414',

'tt2861424',

'tt2395695',

'tt0081846',

'tt0071075',

'tt0141842',

'tt1475582',

'tt1533395',

'tt0417299',

'tt0098769',

'tt1806234',

'tt0303461',

'tt0092337',

'tt0052520',

'tt3530232',

'tt2356777',

'tt1355642',

'tt2802850',

'tt0103359',

'tt0296310',

'tt0877057',

'tt4508902',

'tt0475784',

'tt2092588',

'tt0213338',

'tt1856010',

'tt0063929',

'tt0112130',

'tt2571774',

'tt0081834',

'tt0367279',

'tt4742876',

'tt4574334',

'tt2085059',

'tt0108778',

'tt0098904',

'tt3718778',

'tt0081912',

'tt0098936',

'tt1518542',

'tt0074006',

'tt2707408',

'tt0193676',

'tt1865718',

'tt0096548',

'tt0072500',

'tt0384766',

'tt0118421',

'tt0096697',

'tt0090509',

'tt0121955',

'tt0386676',

'tt4299972',

'tt2560140',

'tt0472954',

'tt0412142',

'tt0214341',

'tt5555260',

'tt2442560',

'tt5712554',

'tt0200276',

'tt0353049',

'tt1910272',

'tt0086661',

'tt0248654',

'tt5189670',

'tt0121220',

'tt1486217',

'tt0096639',

'tt0120570',

'tt4786824',

'tt1628033',

'tt0348914',

'tt0403778',

'tt5288312',

'tt0459159',

'tt3032476',

'tt0407362',

'tt4093826',

'tt0773262',

'tt0417349',

'tt3322312',

'tt0264235',

'tt0106179',

'tt0286486',

'tt2297757',

'tt0088484',

'tt2098220',

'tt5425186',

'tt0318871',

'tt0094517',

'tt0436992',

'tt1586680',

'tt0092324',

'tt0994314',

'tt0203082',

'tt1606375',

'tt0380136',

'tt0187664',

'tt1513168',

'tt0118273',

'tt0421357',

'tt1641384',

'tt0314979',

'tt5834204',

'tt0092455',

'tt0115147',

'tt4295140',

'tt0080306',

'tt1266020',

'tt1831164',

'tt3920596',

'tt0804503',

'tt1492966',

'tt0053488',

'tt0086831',

'tt0758745',

'tt0995832',

'tt0434706',

'tt2401256',

'tt0423731',

'tt0111958',

'tt0863046',

'tt1733785',

'tt2049116',

'tt0275137',

'tt1305826',

'tt0472027',

'tt2100976',

'tt1489428',

'tt0112159',

'tt4158110',

'tt1227926',

'tt1870479',

'tt0979432',

'tt0106028',

'tt0387764',

'tt0237123',

'tt0047708',

'tt0088509',

'tt0290978',

'tt1984119',

'tt0098825',

'tt2306299',

'tt0280249',

'tt3647998',

'tt0094525',

'tt0163507',

'tt0118266',

'tt0182629',

'tt0080297',

'tt0061287',

'tt1758429',

'tt3671754',

'tt0487831',

'tt0388629',

'tt2575988',

'tt4189022',

'tt0458254',

'tt2788432',

'tt0096657',

'tt0346314',

'tt1474684',

'tt4288182',

'tt0417373',

'tt1298820',

'tt0262150',

'tt1695360',

'tt1230180',

'tt2243973',

'tt0129690',

'tt1632701',

'tt2433738',

'tt0149460',

'tt1124373',

'tt0075520',

'tt1795096',

'tt1442449',

'tt5249462',

'tt2937900',

'tt1439629',

'tt5071412',

'tt0397150',

'tt0083466',

'tt2701582',

'tt5114356',

'tt4156586',

'tt0319969',

'tt0103584',

'tt0302199',

'tt0070644',

'tt1883092',

'tt2311418',

'tt3428912',

'tt1442437',

'tt0362192',

'tt0278238',

'tt0387199',

'tt2384811',

'tt0098833',

'tt0074028',

'tt2303687',

'tt0807832',

'tt0056751',

'tt0173528',

'tt3358020',

'tt0103466',

'tt1526318',

'tt0185133',

'tt0075572',

'tt0112084',

'tt1837492',

'tt2919910',

'tt1299368',

'tt0094535',

'tt1520211',

'tt0108906',

'tt0988824',

'tt5421602',

'tt5853176',

'tt0934320',

'tt0337898',

'tt0495212',

'tt0460681',

'tt2407574',

'tt0290988',

'tt1598754',

'tt1119644',

'tt1220617',

'tt3398228',

'tt0411008',

'tt0163503',

'tt2249364',

'tt1409055',

'tt4270492',

'tt0060028',

'tt0118480',

'tt0925266',

'tt3012698',

'tt0402711',

'tt0068098',

'tt0442632',

'tt1839578',

'tt0043208',

'tt5673782']

# This code has been executed, and the results pickled and stored locally, so no need to run these requests

# to the API again. The api address with key to look up show with imdb number is

# http://api.tvmaze.com/lookup/shows?imdb=<show imdb identifier>

DO_NOT_RUN = True # Do not run when notebook is loaded to avoid unnecessary calls to the API

if not DO_NOT_RUN:

shows = pd.DataFrame()

for show_id in show_list:

try:

print show_id

# Get the tv show info from the api

url = "http://api.tvmaze.com/lookup/shows?imdb=" + show_id

r = requests.get(url)

# convert the return data to a dictionary

json_data = r.json()

# load a temp datafram with the dictionary, then append to the composite dataframe

temp_df = pd.DataFrame.from_dict(json_data, orient='index', dtype=None)

ttemp_df = temp_df.T # Was not able to load json in column orientation, so must transpose

shows = shows.append(ttemp_df, ignore_index=True)

except:

print show_id, " could not be retrieved from api"

shows.head()

# write the contents of an object to a file for later retrieval

DO_NOT_RUN = True # Be sure to check the file name to write before enabling execution on this block

if not DO_NOT_RUN:

pickle.dump( shows, open( "save_shows_df.p", "wb" ) )

Get list of bottom rated TV Series

# This code block was changed multiple times to pull html with different sets of low rated shows

# ultimately about 1200 imdb ids were scraped, and about 1/3 of those could be pulled from the TV Maze API.

url ="http://www.imdb.com/search/title?count=600&languages=en&title_type=tv_series&user_rating=3.4,5.0&sort=user_rating,asc"

r = requests.get(url)

html = r.text

html[0:200]

u'\n\n\n\n<!DOCTYPE html>\n<html\nxmlns:og="http://ogp.me/ns#"\nxmlns:fb="http://www.facebook.com/2008/fbml">\n <head>\n <meta charset="utf-8">\n <meta http-equiv="X-UA-Compatible" content="IE=ed'

# Use Beautiful soup to extract the imdb numbers from the webpage

soup = BeautifulSoup(html, "lxml")

loser_list = []

for div in soup.findAll('div', class_='lister-list'):

for h3 in div.findAll('h3', class_='lister-item-header'):

loser_list.append(str(h3.findAll('a')).split("/")[2])

loser_list

['tt0773264',

'tt1798695',

'tt1307083',

'tt4845734',

'tt0046641',

'tt1519575',

'tt0853078',

'tt0118423',

'tt0284767',

'tt4052124',

'tt0878801',

'tt3703500',

'tt1105170',

'tt4363582',

'tt3155428',

'tt0362350',

'tt0287196',

'tt2766052',

'tt0405545',

'tt0262975',

'tt0367278',

'tt7134262',

'tt1695352',

'tt0421470',

'tt2466890',

'tt0343305',

'tt1002739',

'tt1615697',

'tt0274262',

'tt0465320',

'tt1388381',

'tt0358889',

'tt1085789',

'tt1011591',

'tt0364804',

'tt1489335',

'tt3612584',

'tt0363377',

'tt0111930',

'tt0401913',

'tt0808086',

'tt0309212',

'tt5464192',

'tt0080250',

'tt4533338',

'tt4741696',

'tt1922810',

'tt1793868',

'tt4789316',

'tt0185054',

'tt1079622',

'tt1786048',

'tt0790508',

'tt1716372',

'tt0295098',

'tt3409706',

'tt0222574',

'tt2171325',

'tt0442643',

'tt2142117',

'tt0371433',

'tt0138244',

'tt1002010',

'tt0495557',

'tt1811817',

'tt5529996',

'tt1352053',

'tt0439346',

'tt0940147',

'tt3075138',

'tt1974439',

'tt2693842',

'tt0092325',

'tt6772826',

'tt1563069',

'tt0489598',

'tt0142055',

'tt1566154',

'tt0338592',

'tt0167515',

'tt2330327',

'tt1576464',

'tt2389845',

'tt0186747',

'tt0355096',

'tt1821877',

'tt0112033',

'tt1792654',

'tt0472243',

'tt6453018',

'tt3648886',

'tt1599374',

'tt2946482',

'tt4672020',

'tt1016283',

'tt2649480',

'tt1229945',

'tt2390606',

'tt1876612',

'tt0140732',

'tt1176156',

'tt0158522',

'tt4922726',

'tt0068104',

'tt2798842',

'tt1150627',

'tt1545453',

'tt3685566',

'tt0287223',

'tt4185510',

'tt0329912',

'tt0289808',

'tt0358849',

'tt2320439',

'tt0906840',

'tt0800281',

'tt1103082',

'tt2416362',

'tt3493906',

'tt0381827',

'tt0817553',

'tt0252172',

'tt0799872',

'tt0816224',

'tt1077162',

'tt1918005',

'tt1240983',

'tt1415000',

'tt5039916',

'tt0451467',

'tt0296438',

'tt1159990',

'tt0144701',

'tt4718304',

'tt1095213',

'tt1453090',

'tt0168372',

'tt0425725',

'tt3300126',

'tt1415098',

'tt5459976',

'tt4041694',

'tt2322264',

'tt1441005',

'tt1117549',

'tt0365991',

'tt0364807',

'tt1591375',

'tt3562462',

'tt6118186',

'tt3587176',

'tt1372127',

'tt0445865',

'tt2088493',

'tt4658248',

'tt0103444',

'tt4956964',

'tt1326185',

'tt0406422',

'tt1973659',

'tt1578933',

'tt0446621',

'tt1850624',

'tt0159177',

'tt0490539',

'tt0306398',

'tt0288922',

'tt0465336',

'tt0176397',

'tt1641939',

'tt0498879',

'tt0306296',

'tt1394277',

'tt0398416',

'tt2849552',

'tt1433566',

'tt0806893',

'tt3252890',

'tt3774098',

'tt0791275',

'tt5690224',

'tt0361181',

'tt0486953',

'tt1514319',

'tt3697290',

'tt1342752',

'tt0478936',

'tt0094448',

'tt0795101',

'tt1340759',

'tt0840061',

'tt1151434',

'tt0281429',

'tt0845745',

'tt2993514',

'tt0783634',

'tt1650352',

'tt1249256',

'tt2135766',

'tt3231114',

'tt1702421',

'tt2940494',

'tt6664486',

'tt0081857',

'tt1319598',

'tt0247094',

'tt6392176',

'tt0320969',

'tt2720144',

'tt0360266',

'tt2287380',

'tt1715368',

'tt0282291',

'tt2248736',

'tt2010634',

'tt1489432',

'tt4855578',

'tt1721484',

'tt0380850',

'tt3084090',

'tt2392683',

'tt1381004',

'tt1628058',

'tt2935638',

'tt1837169',

'tt2404111',

'tt2364381',

'tt0888095',

'tt2352123',

'tt1013862',

'tt4295320',

'tt1249227',

'tt1879603',

'tt0167566',

'tt0924528',

'tt0361144',

'tt0133300',

'tt5888698',

'tt1468817',

'tt4006060',

'tt0106096',

'tt0287243',

'tt1287376',

'tt0060032',

'tt1535270',

'tt4831262',

'tt0416397',

'tt1546138',

'tt2203971',

'tt0214353',

'tt0368518',

'tt0382506',

'tt5317980',

'tt2313839',

'tt1202295',

'tt4146118',

'tt1226448',

'tt0403748',

'tt0415448',

'tt4665932',

'tt3016956',

'tt1412249',

'tt1829773',

'tt0872053',

'tt0481443',

'tt0493098',

'tt0039120',

'tt1411598',

'tt0106123',

'tt1740718',

'tt0362153',

'tt1637756',

'tt0120974',

'tt2328067',

'tt0057741',

'tt1261356',

'tt2559390',

'tt0083433',

'tt0380934',

'tt4388486',

'tt0108821',

'tt0115338',

'tt0167735',

'tt0460630',

'tt2330453',

'tt0398429',

'tt0294140',

'tt0804423',

'tt2191952',

'tt1118131',

'tt4016700',

'tt5786580',

'tt0950199',

'tt1760165',

'tt4896654',

'tt0414719',

'tt1675974',

'tt0465343',

'tt1477137',

'tt0115171',

'tt3565412',

'tt0382458',

'tt0945153',

'tt0199278',

'tt1353293',

'tt1426343',

'tt2180165',

'tt5117094',

'tt1191039',

'tt0497857',

'tt0780409',

'tt2670950',

'tt1385183',

'tt3396736',

'tt2563482',

'tt4094138',

'tt0295065',

'tt1696268',

'tt0891053',

'tt0914267',

'tt1786018',

'tt1988479',

'tt1707814',

'tt1595853',

'tt2310444',

'tt5434894',

'tt0267216',

'tt0855313',

'tt1832828',

'tt0426685',

'tt2309561',

'tt2486556',

'tt0284786',

'tt3136814',

'tt1989818',

'tt1179310',

'tt0424748',

'tt1126298',

'tt0944946',

'tt1882639',

'tt0439904',

'tt0875887',

'tt1624991',

'tt2747670',

'tt2324247',

'tt0403810',

'tt1724452',

'tt2366252',

'tt3752894',

'tt0198211',

'tt1491318',

'tt1666205',

'tt2460474',

'tt0303435',

'tt0453329',

'tt0220938',

'tt0299264',

'tt0783341',

'tt0850175',

'tt1191056',

'tt0235917',

'tt0111892',

'tt0166442',

'tt2643770',

'tt5633924',

'tt0075485',

'tt0423657',

'tt5327970',

'tt3326032',

'tt5785658',

'tt2190731',

'tt0101041',

'tt3317020',

'tt4732076',

'tt2305717',

'tt3828162',

'tt0890935',

'tt0449460',

'tt0126175',

'tt3601886',

'tt5062878',

'tt1579911',

'tt0407354',

'tt6723012',

'tt5819414',

'tt4180738',

'tt0300802',

'tt2649738',

'tt3181412',

'tt0382400',

'tt3189040',

'tt0324919',

'tt2168240',

'tt2560966',

'tt0168373',

'tt0403824',

'tt0375440',

'tt3746054',

'tt2488150',

'tt4081326',

'tt5011838',

'tt2644204',

'tt1210781',

'tt0246359',

'tt0048898',

'tt3398108',

'tt5701572',

'tt0426827',

'tt0425714',

'tt1252620',

'tt0800289',

'tt0111991',

'tt0479847',

'tt2429392',

'tt2901828',

'tt4147072',

'tt1442411',

'tt2093677',

'tt0498421',

'tt3006666',

'tt3017190',

'tt0193680',

'tt5952954',

'tt0381759',

'tt2539740',

'tt0369176',

'tt3016990',

'tt0328787',

'tt2197994',

'tt0478753',

'tt4530152',

'tt0372643',

'tt5693024',

'tt0855669',

'tt1263594',

'tt5935350',

'tt1589855',

'tt0367444',

'tt3384116',

'tt3790338',

'tt2007260',

'tt0343300',

'tt0813904',

'tt0883849',

'tt0433296',

'tt1342705',

'tt0444988',

'tt1333495',

'tt0969661',

'tt0272967',

'tt0283184',

'tt0444577',

'tt3064496',

'tt0436996',

'tt1796788',

'tt1879997',

'tt4800624',

'tt0497079',

'tt1755893',

'tt0329824',

'tt2245937',

'tt2147632',

'tt3218114',

'tt1583417',

'tt0367403',

'tt1963853',

'tt4854900',

'tt6415490',

'tt1520150',

'tt0236907',

'tt6672370',

'tt1055136',

'tt5865052',

'tt1231448',

'tt6315022',

'tt4351710',

'tt4346344',

'tt6043450',

'tt0096605',

'tt1181712',

'tt0182623',

'tt0307719',

'tt1056344',

'tt0328795',

'tt0098916',

'tt1584617',

'tt2354136',

'tt4287478',

'tt0426347',

'tt1874006',

'tt2006560',

'tt1694893',

'tt2338766',

'tt0843808',

'tt0115155',

'tt4354068',

'tt1134663',

'tt0495787',

'tt0088539',

'tt5426274',

'tt1797127',

'tt5763656',

'tt0360301',

'tt4245504',

'tt0318214',

'tt0080254',

'tt1430135',

'tt0892562',

'tt2603010',

'tt1038918',

'tt0390746',

'tt3773682',

'tt0969372',

'tt1470839',

'tt1477822',

'tt1056446',

'tt0340474',

'tt5104198',

'tt2815184',

'tt0468998',

'tt0772146',

'tt3920816',

'tt3654000',

'tt1753229',

'tt0865687',

'tt0459631',

'tt1314665',

'tt4660152',

'tt0086685',

'tt0150323',

'tt0338576',

'tt2118185',

'tt0198086',

'tt0412184',

'tt4420148',

'tt0497853',

'tt1240534',

'tt2479832',

'tt0174195',

'tt1999642',

'tt1155579',

'tt1640376',

'tt1227586',

'tt3784176',

'tt1958848',

'tt2778982',

'tt1273636',

'tt0357357',

'tt1287301',

'tt0852784',

'tt0482432',

'tt1651941',

'tt0043235',

'tt2110603',

'tt1178184',

'tt0846757',

'tt0170959',

'tt0413617',

'tt1726890',

'tt0220874',

'tt0859872',

'tt4219276',

'tt0327268',

'tt0843319',

'tt3131346',

'tt0795072',

'tt5650560',

'tt0827847',

'tt1525767',

'tt1043913',

'tt0266179',

'tt0413558',

'tt0307714',

'tt4693416',

'tt0409619',

'tt5684430',

'tt0134269',

'tt5486088',

'tt1252370',

'tt6370626',

'tt3824018',

'tt2555880',

'tt3310544',

'tt2125758',

'tt1973047',

'tt6748366',

'tt0106113',

'tt0934701',

'tt2059031',

'tt0088598',

'tt1056536',

'tt1618950',

'tt6987940',

'tt5915978',

'tt0106008',

'tt0115206',

'tt0120992',

'tt4575056',

'tt2889104',

'tt0428169']

# first_loser_list = loser_list

# This code has been executed, and the results pickled and stored locally, so no need to run these requests

# to the API again

DO_NOT_RUN = True

if not DO_NOT_RUN:

losers = pd.DataFrame()

for loser_id in loser_list:

try:

print loser_id

# Get the tv show info from the api

url = "http://api.tvmaze.com/lookup/shows?imdb=" + loser_id

r = requests.get(url)

# convert the return data to a dictionary

json_data = r.json()

# load a temp datafram with the dictionary, then append to the composite dataframe

temp_df = pd.DataFrame.from_dict(json_data, orient='index', dtype=None)

ttemp_df = temp_df.T # Was not able to load json in column orientation, so must transpose

losers = losers.append(ttemp_df, ignore_index=True)

except:

print loser_id, " could not be retrieved from api"

losers.head()

tt0465347

tt0465347 could not be retrieved from api

tt4427122

tt4427122 could not be retrieved from api

tt1015682

tt1015682 could not be retrieved from api

tt2505738

tt2505738 could not be retrieved from api

tt2402465

tt2402465 could not be retrieved from api

tt0278236

tt0278236 could not be retrieved from api

tt0268066

tt0268066 could not be retrieved from api

tt4813760

tt4813760 could not be retrieved from api

tt1526001

tt1526001 could not be retrieved from api

tt1243976

tt1243976 could not be retrieved from api

tt2058498

tt3897284

tt3897284 could not be retrieved from api

tt3665690

tt3665690 could not be retrieved from api

tt4132180

tt4132180 could not be retrieved from api

tt0824229

tt0824229 could not be retrieved from api

tt0314990

tt0314990 could not be retrieved from api

tt5423750

tt5423750 could not be retrieved from api

tt5423664

tt5423664 could not be retrieved from api

tt2175125

tt2175125 could not be retrieved from api

tt0404593

tt0404593 could not be retrieved from api

tt4160422

tt4160422 could not be retrieved from api

tt4552562

tt4552562 could not be retrieved from api

tt5804854

tt5804854 could not be retrieved from api

tt0886666

tt0886666 could not be retrieved from api

tt5423824

tt5423824 could not be retrieved from api

tt3500210

tt3500210 could not be retrieved from api

tt0285357

tt0285357 could not be retrieved from api

tt0280234

tt0280234 could not be retrieved from api

tt1863530

tt1863530 could not be retrieved from api

tt0280349

tt0280349 could not be retrieved from api

tt2660922

tt2660922 could not be retrieved from api

tt0292776

tt0292776 could not be retrieved from api

tt4566242

tt0264230

tt0264230 could not be retrieved from api

tt1102523

tt1102523 could not be retrieved from api

tt3333790

tt3333790 could not be retrieved from api

tt0320863

tt0320863 could not be retrieved from api

tt0830848

tt0830848 could not be retrieved from api

tt0939270

tt0939270 could not be retrieved from api

tt1459294

tt1459294 could not be retrieved from api

tt6026132

tt6026132 could not be retrieved from api

tt1443593

tt1443593 could not be retrieved from api

tt0354267

tt0354267 could not be retrieved from api

tt0147749

tt0147749 could not be retrieved from api

tt0161180

tt0161180 could not be retrieved from api

tt4733812

tt4733812 could not be retrieved from api

tt0367362

tt0367362 could not be retrieved from api

tt5626868

tt5626868 could not be retrieved from api

tt7268752

tt7268752 could not be retrieved from api

tt1364951

tt2341819

tt0464767

tt0464767 could not be retrieved from api

tt3550770

tt3550770 could not be retrieved from api

tt6422012

tt6422012 could not be retrieved from api

tt3154248

tt3154248 could not be retrieved from api

tt5016274

tt5016274 could not be retrieved from api

tt1715229

tt1715229 could not be retrieved from api

tt0489426

tt0489426 could not be retrieved from api

tt5798754

tt5798754 could not be retrieved from api

tt2022182

tt2022182 could not be retrieved from api

tt0303564

tt0303564 could not be retrieved from api

tt3462252

tt3462252 could not be retrieved from api

tt0329849

tt0329849 could not be retrieved from api

tt5074180

tt5074180 could not be retrieved from api

tt3900878

tt3900878 could not be retrieved from api

tt3887402

tt3887402 could not be retrieved from api

tt1893088

tt0445890

tt0149408

tt0149408 could not be retrieved from api

tt1360544

tt1360544 could not be retrieved from api

tt1718355

tt1718355 could not be retrieved from api

tt2364950

tt2364950 could not be retrieved from api

tt2279571

tt0285374

tt0285374 could not be retrieved from api

tt5267590

tt5267590 could not be retrieved from api

tt0314993

tt0314993 could not be retrieved from api

tt0300870

tt0300870 could not be retrieved from api

tt7036530

tt7036530 could not be retrieved from api

tt5657014

tt5657014 could not be retrieved from api

tt0149488

tt0149488 could not be retrieved from api

tt1204865

tt1204865 could not be retrieved from api

tt1182860

tt1182860 could not be retrieved from api

tt0423626

tt0423626 could not be retrieved from api

tt4223864

tt4223864 could not be retrieved from api

tt1773440

tt1773440 could not be retrieved from api

tt0872067

tt0872067 could not be retrieved from api

tt0428172

tt0428172 could not be retrieved from api

tt0817379

tt0817379 could not be retrieved from api

tt1210720

tt1210720 could not be retrieved from api

tt3855028

tt3855028 could not be retrieved from api

tt1611594

tt1611594 could not be retrieved from api

tt5822004

tt5822004 could not be retrieved from api

tt6524930

tt6524930 could not be retrieved from api

tt1733734

tt1902032

tt1902032 could not be retrieved from api

tt0466201

tt0466201 could not be retrieved from api

tt1757293

tt1757293 could not be retrieved from api

tt1807575

tt1807575 could not be retrieved from api

tt0332896

tt0332896 could not be retrieved from api

tt3140278

tt3140278 could not be retrieved from api

tt1176297

tt1176297 could not be retrieved from api

tt0285406

tt0285406 could not be retrieved from api

tt6680212

tt6680212 could not be retrieved from api

tt0200336

tt0200336 could not be retrieved from api

tt0385483

tt0385483 could not be retrieved from api

tt3534894

tt3534894 could not be retrieved from api

tt1108281

tt1108281 could not be retrieved from api

tt3855016

tt3855016 could not be retrieved from api

tt0787948

tt0787948 could not be retrieved from api

tt1372153

tt1292967

tt1292967 could not be retrieved from api

tt1466565

tt1466565 could not be retrieved from api

tt0435565

tt0435565 could not be retrieved from api

tt1817054

tt2879822

tt1229266

tt1229266 could not be retrieved from api

tt0364837

tt0364837 could not be retrieved from api

tt0477409

tt0477409 could not be retrieved from api

tt0875097

tt0875097 could not be retrieved from api

tt1227542

tt1227542 could not be retrieved from api

tt1131289

tt1131289 could not be retrieved from api

tt0355135

tt0355135 could not be retrieved from api

tt1418598

tt0290970

tt0290970 could not be retrieved from api

tt0184124

tt0184124 could not be retrieved from api

tt0490736

tt0490736 could not be retrieved from api

tt0439354

tt0439354 could not be retrieved from api

tt1157935

tt1157935 could not be retrieved from api

tt1425641

tt1425641 could not be retrieved from api

tt2830404

tt2830404 could not be retrieved from api

tt0835397

tt0835397 could not be retrieved from api

tt0880581

tt0880581 could not be retrieved from api

tt1078463

tt1078463 could not be retrieved from api

tt0190177

tt1234506

tt1234506 could not be retrieved from api

tt0323463

tt0323463 could not be retrieved from api

tt5047510

tt5338860

tt5168468

tt5168468 could not be retrieved from api

tt0296322

tt0296322 could not be retrieved from api

tt3911254

tt3911254 could not be retrieved from api

tt3827516

tt3827516 could not be retrieved from api

tt0364899

tt0364899 could not be retrieved from api

tt4204032

tt4204032 could not be retrieved from api

tt0259768

tt0259768 could not be retrieved from api

tt0287880

tt0287880 could not be retrieved from api

tt0270763

tt0270763 could not be retrieved from api

tt0846349

tt0846349 could not be retrieved from api

tt2699648

tt2699648 could not be retrieved from api

tt3616368

tt3616368 could not be retrieved from api

tt2672920

tt2672920 could not be retrieved from api

tt1848281

tt0813074

tt0813074 could not be retrieved from api

tt1694422

tt1694422 could not be retrieved from api

tt0472241

tt0472241 could not be retrieved from api

tt0202186

tt0202186 could not be retrieved from api

tt1297366

tt1297366 could not be retrieved from api

tt3919918

tt3919918 could not be retrieved from api

tt1564985

tt1564985 could not be retrieved from api

tt3336800

tt3336800 could not be retrieved from api

tt6839504

tt2114184

tt2254454

tt2254454 could not be retrieved from api

tt1674023

tt0824737

tt0824737 could not be retrieved from api

tt1288431

tt1288431 could not be retrieved from api

tt1705811

tt1705811 could not be retrieved from api

tt0968726

tt0968726 could not be retrieved from api

tt2058840

tt2058840 could not be retrieved from api

tt1971860

tt3857708

tt3857708 could not be retrieved from api

tt0315030

tt0315030 could not be retrieved from api

tt2337185

tt2337185 could not be retrieved from api

tt0775356

tt0775356 could not be retrieved from api

tt0244356

tt0244356 could not be retrieved from api

tt2338400

tt2338400 could not be retrieved from api

tt0220047

tt0220047 could not be retrieved from api

tt0341789

tt0341789 could not be retrieved from api

tt0197151

tt0197151 could not be retrieved from api

tt0222529

tt0222529 could not be retrieved from api

tt6086050

tt6086050 could not be retrieved from api

tt3100634

tt1625263

tt1625263 could not be retrieved from api

tt2289244

tt2289244 could not be retrieved from api

tt1936732

tt0278229

tt0278229 could not be retrieved from api

tt0429438

tt0429438 could not be retrieved from api

tt1410490

tt1410490 could not be retrieved from api

tt5588910

tt5588910 could not be retrieved from api

tt3670858

tt3670858 could not be retrieved from api

tt1197582

tt0397182

tt0397182 could not be retrieved from api

tt1911975

tt1911975 could not be retrieved from api

tt0420366

tt0420366 could not be retrieved from api

tt3079034

tt3079034 could not be retrieved from api

tt0859270

tt0859270 could not be retrieved from api

tt0050070

tt0050070 could not be retrieved from api

tt0300798

tt0300798 could not be retrieved from api

tt5915502

tt5915502 could not be retrieved from api

tt6697244

tt6697244 could not be retrieved from api

tt1776388

tt1776388 could not be retrieved from api

tt0424639

tt0424639 could not be retrieved from api

tt1119204

tt1119204 could not be retrieved from api

tt1744868

tt1744868 could not be retrieved from api

tt1588824

tt1588824 could not be retrieved from api

tt1485389

tt3696798

tt3696798 could not be retrieved from api

tt0301123

tt0301123 could not be retrieved from api

tt1018436

tt1018436 could not be retrieved from api

tt0815776

tt0815776 could not be retrieved from api

tt0407462

tt0407462 could not be retrieved from api

tt0198147

tt0198147 could not be retrieved from api

tt0997412

tt0997412 could not be retrieved from api

tt2288050

tt1612920

tt0402701

tt5047494

tt5047494 could not be retrieved from api

tt5368216

tt5368216 could not be retrieved from api

tt3356610

tt3356610 could not be retrieved from api

tt0491735

tt1454750

tt1454750 could not be retrieved from api

tt5891726

tt5891726 could not be retrieved from api

tt2369946

tt4286824

tt4286824 could not be retrieved from api

tt0476926

tt0476926 could not be retrieved from api

tt5167034

tt5167034 could not be retrieved from api

tt0056759

tt0056759 could not be retrieved from api

tt3622818

tt3622818 could not be retrieved from api

tt0887788

tt0887788 could not be retrieved from api

tt4588620

tt4588620 could not be retrieved from api

tt0258341

tt0258341 could not be retrieved from api

tt0489430

tt0489430 could not be retrieved from api

tt2567210

tt2567210 could not be retrieved from api

tt0990403

tt4674178

tt4674178 could not be retrieved from api

tt0125638

tt0125638 could not be retrieved from api

tt5146640

tt5146640 could not be retrieved from api

tt0196284

tt0196284 could not be retrieved from api

tt3075154

tt3075154 could not be retrieved from api

tt0436003

tt0436003 could not be retrieved from api

tt1538090

tt1538090 could not be retrieved from api

tt1728226

tt1728226 could not be retrieved from api

tt3796070

tt3796070 could not be retrieved from api

tt1381395

tt1381395 could not be retrieved from api

tt0190199

tt0190199 could not be retrieved from api

tt0855213

tt0855213 could not be retrieved from api

tt0358890

tt0358890 could not be retrieved from api

tt3484986

tt3484986 could not be retrieved from api

tt2208507

tt2208507 could not be retrieved from api

tt4896052

tt4896052 could not be retrieved from api

tt6148376

tt0217211

tt0217211 could not be retrieved from api

tt0430836

tt0430836 could not be retrieved from api

tt1429551

tt1291098

tt1291098 could not be retrieved from api

tt0399968

tt0399968 could not be retrieved from api

tt2909920

tt2909920 could not be retrieved from api

tt3164276

tt3164276 could not be retrieved from api

tt1586637

tt4873032

tt0926012

tt0926012 could not be retrieved from api

tt1305560

tt1305560 could not be retrieved from api

tt1291488

tt1291488 could not be retrieved from api

tt0428088

tt0428088 could not be retrieved from api

tt1057469

tt1057469 could not be retrieved from api

tt3807326

tt3807326 could not be retrieved from api

tt3293566

tt0410964

tt1579186

tt0271931

tt6519752

tt1417358

tt4568130

tt1705611

tt2235190

tt0244328

tt0244328 could not be retrieved from api

tt0459155

tt0459155 could not be retrieved from api

tt1890984

tt1890984 could not be retrieved from api

tt0460381

tt0460381 could not be retrieved from api

tt0439069

tt0439069 could not be retrieved from api

tt0329817

tt0329817 could not be retrieved from api

tt1805082

tt1805082 could not be retrieved from api

tt0468985

tt0468985 could not be retrieved from api

tt1071166

tt1071166 could not be retrieved from api

tt1634699

tt1634699 could not be retrieved from api

tt1086761

tt4214468

tt0170930

tt0170930 could not be retrieved from api

tt5937940

tt0305056

tt1024887

tt1024887 could not be retrieved from api

tt1833558

tt7062438

tt7062438 could not be retrieved from api

tt4411548

tt4411548 could not be retrieved from api

tt0105970

tt0105970 could not be retrieved from api

tt0348949

tt0348949 could not be retrieved from api

tt2309197

tt2309197 could not be retrieved from api

tt0327271

tt0327271 could not be retrieved from api

tt1729597

tt1729597 could not be retrieved from api

tt0428108

tt0428108 could not be retrieved from api

tt3144026

tt3144026 could not be retrieved from api

tt0292770

tt0077041

tt1489024

tt0458269

tt1020924

tt0444578

tt0787980

tt0249275

tt1280868

tt0462121

tt3136086

tt1908157

tt0055714

tt0781991

tt0224517

tt0426804

tt0484508

tt0186742

tt0460081

tt0320809

tt0798631

tt3119834

tt3804586

tt0479614

tt0479614 could not be retrieved from api

tt0780447

tt0780447 could not be retrieved from api

tt0123366

tt3481544

tt3975956

tt3975956 could not be retrieved from api

tt5335110

tt0471990

tt0471990 could not be retrieved from api

tt1332074

tt6846846

tt6846846 could not be retrieved from api

tt1259798

tt0381741

tt0381741 could not be retrieved from api

tt2953706

tt1244881

tt6208480

tt6208480 could not be retrieved from api

tt1232190

tt0829040

tt0829040 could not be retrieved from api

tt3859844

tt1761662

tt1761662 could not be retrieved from api

tt2262354

tt0103411

tt0103411 could not be retrieved from api

tt0356281

tt0356281 could not be retrieved from api

tt4628798

tt4628798 could not be retrieved from api

tt0283714

tt1147702

tt1147702 could not be retrieved from api

tt0780444

tt0780444 could not be retrieved from api

tt1981147

tt0756524

tt0312095

tt0260645

tt1728958

tt4688354

tt1296242

tt1062211

tt1500453

tt0358320

tt1118205

tt0480781

tt0303490

tt0278256

tt0812148

tt0892683

tt1562042

tt0218767

tt2265901

tt1456074

tt1978967

tt0313038

tt5437800

tt5437800 could not be retrieved from api

tt2453016

tt5209238

tt5209238 could not be retrieved from api

tt7165310

tt7165310 could not be retrieved from api

tt1277979

tt0362379

tt0362379 could not be retrieved from api

tt0348512

tt0348512 could not be retrieved from api

tt1024814

tt0065343

tt0065343 could not be retrieved from api

tt3976016

tt3976016 could not be retrieved from api

tt1459376

tt1459376 could not be retrieved from api

tt4629950

tt4629950 could not be retrieved from api

tt0443361

tt0443361 could not be retrieved from api

tt1320317

tt1320317 could not be retrieved from api

tt1770959

tt6212410

tt6212410 could not be retrieved from api

tt3731648

tt5872774

tt5872774 could not be retrieved from api

tt4410468

tt0196232

tt0196232 could not be retrieved from api

tt3693866

tt3693866 could not be retrieved from api

tt6295148

tt6295148 could not be retrieved from api

tt0804424

tt0804424 could not be retrieved from api

tt0458252

tt0458252 could not be retrieved from api

tt2933730

tt2933730 could not be retrieved from api

tt5690306

tt5690306 could not be retrieved from api

tt3038492

tt0854912

tt0426740

tt0364787

tt1033281

tt0473416

tt5423592

tt2064427

tt1208634

tt0402660

tt1566044

tt0292845

tt2633208

tt1685317

tt0421158

tt1176154

tt3099832

tt0396337

tt0337790

tt0287847

tt0421343

tt0408364

tt0346300

tt0346300 could not be retrieved from api

tt2908564

tt2908564 could not be retrieved from api

tt0348894

tt6959064

tt6959064 could not be retrieved from api

tt1737565

tt1454730

tt0468999

tt1495163

tt2514488

tt2390003

tt0293725

tt0293725 could not be retrieved from api

tt0092362

tt0092362 could not be retrieved from api

tt0818895

tt0818895 could not be retrieved from api

tt1509653

tt1509653 could not be retrieved from api

tt1809909

tt1809909 could not be retrieved from api

tt1796975

tt1796975 could not be retrieved from api

tt6501522

tt6501522 could not be retrieved from api

tt0424611

tt0424611 could not be retrieved from api

tt0439932

tt0439932 could not be retrieved from api

tt4671004

tt0471048

tt0471048 could not be retrieved from api

tt1156526

tt1156526 could not be retrieved from api

tt0264226

tt0264226 could not be retrieved from api

tt1170222

tt1170222 could not be retrieved from api

tt2689384

tt0295081

tt0295081 could not be retrieved from api

tt4369244

tt4369244 could not be retrieved from api

tt2781594

tt2781594 could not be retrieved from api

tt4662374

tt1105316

tt1105316 could not be retrieved from api

tt3840030

tt3840030 could not be retrieved from api

tt2579722

tt0072546

tt4628790

tt0046590

tt2184509

tt0497854

tt0363323

tt1458207

tt0439356

tt0377146

tt0954318

tt2214505

tt2435530

tt0473419

tt0768151

tt0439365

tt0278177

tt1299440

tt2083701

tt1933836

tt6473824

tt6473824 could not be retrieved from api

tt0187632

tt0187632 could not be retrieved from api

tt4033696

tt0391666

tt0391666 could not be retrieved from api

tt0465344

tt0465344 could not be retrieved from api

tt2170392

tt4390084

tt2189892

tt2189892 could not be retrieved from api

tt6586510

tt6586510 could not be retrieved from api

tt3174316

tt2374870

tt2374870 could not be retrieved from api

tt2366111

tt2111994

tt2111994 could not be retrieved from api

tt4588734

tt4588734 could not be retrieved from api

tt0863047

tt0863047 could not be retrieved from api

tt1495648

tt1579108

tt1579108 could not be retrieved from api

tt1159610

tt0984168

tt0984168 could not be retrieved from api

tt6752226

tt6752226 could not be retrieved from api

tt0856723

tt0856723 could not be retrieved from api

tt0416347

tt0416347 could not be retrieved from api

tt5571740

tt5571740 could not be retrieved from api

tt1552185

tt1552185 could not be retrieved from api

tt3595870

tt1728864

tt1062185

tt0380949

tt1013861

tt0848174

tt0321000

tt1855738

tt0363335

tt0420381

tt1814550

tt1987353

tt0187654

tt1461569

tt1850160

tt0954661

tt0198095

tt4012388

tt0482028

tt0176381

tt0419307

tt1684732

tt5154762

tt3139774

tt0819708

tt0819708 could not be retrieved from api

tt0888280

tt0888280 could not be retrieved from api

tt6021260

tt6021260 could not be retrieved from api

tt0185065

tt0185065 could not be retrieved from api

tt4123482

tt1491299

tt1492090

tt6059298

tt6059298 could not be retrieved from api

tt1826951

tt0273025

tt0273025 could not be retrieved from api

tt1888795

tt1888795 could not be retrieved from api

tt1821879

tt1821879 could not be retrieved from api

tt2497788

tt0476038

tt0476038 could not be retrieved from api

tt1830924

tt1830924 could not be retrieved from api

tt1368470

tt1368470 could not be retrieved from api

tt1361721

tt1361721 could not be retrieved from api

tt2647792

tt2647792 could not be retrieved from api

tt3148194

tt0302163

tt0302163 could not be retrieved from api

tt5515342

tt0292859

tt0292859 could not be retrieved from api

tt0243082

tt0243082 could not be retrieved from api

tt4654650

tt4654650 could not be retrieved from api

tt0298682

tt0298682 could not be retrieved from api

tt1534856

tt1534856 could not be retrieved from api

tt3097134

tt3097134 could not be retrieved from api

tt2582840

tt2582840 could not be retrieved from api

tt4605154

tt1478217

tt1478217 could not be retrieved from api

tt0374366

tt1631948

tt0368494

tt1721347

tt5319670

tt1684855

tt5209280

tt6217260

tt6842890

tt5040090

tt3501210

tt0367323

tt0397012

tt0954837

tt1784056

tt3228548

tt0861753

tt0933898

tt0433705

tt0287845

tt0329816

tt0329816 could not be retrieved from api

tt2815342

tt3548386

tt3548386 could not be retrieved from api

tt0410958

tt0410958 could not be retrieved from api

tt0057740

tt0057740 could not be retrieved from api

tt5583124

tt5583124 could not be retrieved from api

tt1440045

tt1440045 could not be retrieved from api

tt0810737

tt0810737 could not be retrieved from api

tt0989753

tt0989753 could not be retrieved from api

tt1313075

tt1313075 could not be retrieved from api

tt1073528

tt1073528 could not be retrieved from api

tt0310516

tt0310516 could not be retrieved from api

tt1642103

tt1642103 could not be retrieved from api

tt0448973

tt0448973 could not be retrieved from api

tt0302098

tt0302098 could not be retrieved from api

tt0805368

tt0805368 could not be retrieved from api

tt1124662

tt1124662 could not be retrieved from api

tt0324891

tt0324891 could not be retrieved from api

tt0423631

tt0423631 could not be retrieved from api

tt2226096

tt2226096 could not be retrieved from api

tt0773264

tt1798695

tt1307083

tt4845734

tt0046641

tt0046641 could not be retrieved from api

tt1519575

tt1519575 could not be retrieved from api

tt0853078

tt0853078 could not be retrieved from api

tt0118423

tt0118423 could not be retrieved from api

tt0284767

tt4052124

tt4052124 could not be retrieved from api

tt0878801

tt3703500

# Oops, We've hit the API to hard. A second attempt to pull low rated show information

# will be needed, with a time delay to stay within API limitations.

# This shape is misleading, as many of the rows simply contain a message that the API limit

# had been exceeded

losers.shape

# This is accurate, 235 shows from the top show list were obtained

shows.shape

DO_NOT_RUN = True # Be sure to check the file name to write before enabling execution on this block

if not DO_NOT_RUN:

pickle.dump( losers, open( "save_losers_df.p", "wb" ) )

# read data back in from the saved file

losers2 = pickle.load( open( "save_losers_df.p", "rb" ) )

This is the start of a second attempt to pull more TV shows with low ratings

This is needed. After the first pull, and after cleanup, there were only 10 Shows left in the low rating category with complete information. The cells below collect more data from the API for additional low rated shows.

losers.loc[0:9]['externals']

0 {u'thetvdb': 283995, u'tvrage': 40425, u'imdb'...

1 {u'thetvdb': 299234, u'tvrage': 50418, u'imdb'...

2 {u'thetvdb': 118021, u'tvrage': None, u'imdb':...

3 {u'thetvdb': 274705, u'tvrage': 31580, u'imdb'...

4 {u'thetvdb': 246161, u'tvrage': None, u'imdb':...

5 {u'thetvdb': 75638, u'tvrage': None, u'imdb': ...

6 {u'thetvdb': 260183, u'tvrage': 31024, u'imdb'...

7 {u'thetvdb': None, u'tvrage': None, u'imdb': u...

8 {u'thetvdb': 299688, u'tvrage': None, u'imdb':...

9 {u'thetvdb': 222481, u'tvrage': None, u'imdb':...

Name: externals, dtype: object

# In the first attempt, there were a number of shows where data was not returned becuase of two many api calls

# in quick succession. In order to re-submit those show ids, it is necessary to get a list of ids that were

# returned successfully, and then to remove them from the original list of ids before resubmitting.

# losers_pulled is a list of ids that were successful on the previous attempt.

losers_pulled = []

no_imdb_at_idx = []

for i in range(len(losers)):

try:

losers_pulled.append(losers.loc[i,'externals']['imdb'])

except:

no_imdb_at_idx.append(i)

print no_imdb_at_idx

print

print losers_pulled

print len(losers_pulled)

[11, 35, 36, 37, 38, 39, 40, 41, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184, 185, 186, 187, 188, 189, 190, 191, 192, 201, 202, 203, 204, 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 216, 217, 218, 219, 220, 228]

[u'tt2058498', u'tt4566242', u'tt1364951', u'tt2341819', u'tt1893088', u'tt0445890', u'tt2279571', u'tt1733734', u'tt1372153', u'tt1817054', u'tt2879822', u'tt0190177', u'tt5047510', u'tt5338860', u'tt1848281', u'tt6839504', u'tt2114184', u'tt1674023', u'tt1971860', u'tt3100634', u'tt1936732', u'tt1197582', u'tt1485389', u'tt2288050', u'tt1612920', u'tt0402701', u'tt0491735', u'tt2369946', u'tt0990403', u'tt6148376', u'tt1429551', u'tt1586637', u'tt4873032', u'tt3293566', u'tt2235190', u'tt1086761', u'tt4214468', u'tt5937940', u'tt0305056', u'tt1833558', u'tt0123366', u'tt3481544', u'tt5335110', u'tt1332074', u'tt1259798', u'tt2953706', u'tt1244881', u'tt1232190', u'tt3859844', u'tt2262354', u'tt0283714', u'tt0313038', u'tt2453016', u'tt1277979', u'tt1024814', u'tt1770959', u'tt3731648', u'tt4410468', u'tt0348894', u'tt1737565', u'tt1454730', u'tt0468999', u'tt1495163', u'tt2514488', u'tt2390003', u'tt4671004', u'tt2689384', u'tt4662374', u'tt1299440', u'tt2083701', u'tt1933836', u'tt4033696', u'tt2170392', u'tt4390084', u'tt3174316', u'tt2366111', u'tt1495648', u'tt1159610', u'tt4123482', u'tt1491299', u'tt1492090', u'tt1826951', u'tt2497788', u'tt3148194', u'tt5515342', u'tt4605154', u'tt2815342', u'tt0773264', u'tt1798695', u'tt1307083', u'tt4845734', u'tt0284767', u'tt0878801']

93

# There were that do not even include their own imdb number, and indicator that the pull was unsuccessful

# While a few of these might have been successful but have only limited data, most are unusuable.

# Thus all will be re-requested at a slower rate and any duplicates removed when the data is merged.

print len(no_imdb_at_idx)

# This generates a list of the original requests that were not successfully returned from the api.

# First the will be requested again, using a time delay to avoid requesting more than the server

# will willingly return. They will also be batched in groups of 100 ids

missing_losers = [x for x in loser_list if x not in losers_pulled]

missing_losers

['tt0465347',

'tt4427122',

'tt1015682',

'tt2505738',

'tt2402465',

'tt0278236',

'tt0268066',

'tt4813760',

'tt1526001',

'tt1243976',

'tt3897284',

'tt3665690',

'tt4132180',

'tt0824229',

'tt0314990',

'tt5423750',

'tt5423664',

'tt2175125',

'tt0404593',

'tt4160422',

'tt4552562',

'tt5804854',

'tt0886666',

'tt5423824',

'tt3500210',

'tt0285357',

'tt0280234',

'tt1863530',

'tt0280349',

'tt2660922',

'tt0292776',

'tt0264230',

'tt1102523',

'tt3333790',

'tt0320863',

'tt0830848',

'tt0939270',

'tt1459294',

'tt6026132',

'tt1443593',

'tt0354267',

'tt0147749',

'tt0161180',

'tt4733812',

'tt0367362',

'tt5626868',

'tt7268752',

'tt0464767',

'tt3550770',

'tt6422012',

'tt3154248',

'tt5016274',

'tt1715229',

'tt0489426',

'tt5798754',

'tt2022182',

'tt0303564',

'tt3462252',

'tt0329849',

'tt5074180',

'tt3900878',

'tt3887402',

'tt0149408',

'tt1360544',

'tt1718355',

'tt2364950',

'tt0285374',

'tt5267590',

'tt0314993',

'tt0300870',

'tt7036530',

'tt5657014',

'tt0149488',

'tt1204865',

'tt1182860',

'tt0423626',

'tt4223864',

'tt1773440',

'tt0872067',

'tt0428172',

'tt0817379',

'tt1210720',

'tt3855028',

'tt1611594',

'tt5822004',

'tt6524930',

'tt1902032',

'tt0466201',

'tt1757293',

'tt1807575',

'tt0332896',

'tt3140278',

'tt1176297',

'tt0285406',

'tt6680212',

'tt0200336',

'tt0385483',

'tt3534894',

'tt1108281',

'tt3855016',

'tt0787948',

'tt1292967',

'tt1466565',

'tt0435565',

'tt1229266',

'tt0364837',

'tt0477409',

'tt0875097',

'tt1227542',

'tt1131289',

'tt0355135',

'tt1418598',

'tt0290970',

'tt0184124',

'tt0490736',

'tt0439354',

'tt1157935',

'tt1425641',

'tt2830404',

'tt0835397',

'tt0880581',

'tt1078463',

'tt1234506',

'tt0323463',

'tt5168468',

'tt0296322',

'tt3911254',

'tt3827516',

'tt0364899',

'tt4204032',

'tt0259768',

'tt0287880',

'tt0270763',

'tt0846349',

'tt2699648',

'tt3616368',

'tt2672920',

'tt0813074',

'tt1694422',

'tt0472241',

'tt0202186',

'tt1297366',

'tt3919918',

'tt1564985',

'tt3336800',

'tt2254454',

'tt0824737',

'tt1288431',

'tt1705811',

'tt0968726',

'tt2058840',

'tt3857708',

'tt0315030',

'tt2337185',

'tt0775356',

'tt0244356',

'tt2338400',

'tt0220047',

'tt0341789',

'tt0197151',

'tt0222529',

'tt6086050',

'tt1625263',

'tt2289244',

'tt0278229',

'tt0429438',

'tt1410490',

'tt5588910',

'tt3670858',

'tt0397182',

'tt1911975',

'tt0420366',

'tt3079034',

'tt0859270',

'tt0050070',

'tt0300798',

'tt5915502',

'tt6697244',

'tt1776388',

'tt0424639',

'tt1119204',

'tt1744868',

'tt1588824',

'tt3696798',

'tt0301123',

'tt1018436',

'tt0815776',

'tt0407462',

'tt0198147',

'tt0997412',

'tt5047494',

'tt5368216',

'tt3356610',

'tt1454750',

'tt5891726',

'tt4286824',

'tt0476926',

'tt5167034',

'tt0056759',

'tt3622818',

'tt0887788',

'tt4588620',

'tt0258341',

'tt0489430',

'tt2567210',

'tt4674178',

'tt0125638',

'tt5146640',

'tt0196284',

'tt3075154',

'tt0436003',

'tt1538090',

'tt1728226',

'tt3796070',

'tt1381395',

'tt0190199',

'tt0855213',

'tt0358890',

'tt3484986',

'tt2208507',

'tt4896052',

'tt0217211',

'tt0430836',

'tt1291098',

'tt0399968',

'tt2909920',

'tt3164276',

'tt0926012',

'tt1305560',

'tt1291488',

'tt0428088',

'tt1057469',

'tt3807326',

'tt0410964',

'tt1579186',

'tt0271931',

'tt6519752',

'tt1417358',

'tt4568130',

'tt1705611',

'tt0244328',

'tt0459155',

'tt1890984',

'tt0460381',

'tt0439069',

'tt0329817',

'tt1805082',

'tt0468985',

'tt1071166',

'tt1634699',

'tt0170930',

'tt1024887',

'tt7062438',

'tt4411548',

'tt0105970',

'tt0348949',

'tt2309197',

'tt0327271',

'tt1729597',

'tt0428108',

'tt3144026',

'tt0292770',

'tt0077041',

'tt1489024',

'tt0458269',

'tt1020924',

'tt0444578',

'tt0787980',

'tt0249275',

'tt1280868',

'tt0462121',

'tt3136086',

'tt1908157',

'tt0055714',

'tt0781991',

'tt0224517',

'tt0426804',

'tt0484508',

'tt0186742',

'tt0460081',

'tt0320809',

'tt0798631',

'tt3119834',

'tt3804586',

'tt0479614',

'tt0780447',

'tt3975956',

'tt0471990',

'tt6846846',

'tt0381741',

'tt6208480',

'tt0829040',

'tt1761662',

'tt0103411',

'tt0356281',

'tt4628798',

'tt1147702',

'tt0780444',

'tt1981147',

'tt0756524',

'tt0312095',

'tt0260645',

'tt1728958',

'tt4688354',

'tt1296242',

'tt1062211',

'tt1500453',

'tt0358320',

'tt1118205',

'tt0480781',

'tt0303490',

'tt0278256',

'tt0812148',

'tt0892683',

'tt1562042',

'tt0218767',

'tt2265901',

'tt1456074',

'tt1978967',

'tt5437800',

'tt5209238',

'tt7165310',

'tt0362379',

'tt0348512',

'tt0065343',

'tt3976016',

'tt1459376',

'tt4629950',

'tt0443361',

'tt1320317',

'tt6212410',

'tt5872774',

'tt0196232',

'tt3693866',

'tt6295148',

'tt0804424',

'tt0458252',

'tt2933730',

'tt5690306',

'tt3038492',

'tt0854912',

'tt0426740',

'tt0364787',

'tt1033281',

'tt0473416',

'tt5423592',

'tt2064427',

'tt1208634',

'tt0402660',

'tt1566044',

'tt0292845',

'tt2633208',

'tt1685317',

'tt0421158',

'tt1176154',

'tt3099832',

'tt0396337',

'tt0337790',

'tt0287847',

'tt0421343',

'tt0408364',

'tt0346300',

'tt2908564',

'tt6959064',

'tt0293725',

'tt0092362',

'tt0818895',

'tt1509653',

'tt1809909',

'tt1796975',

'tt6501522',

'tt0424611',

'tt0439932',

'tt0471048',

'tt1156526',

'tt0264226',

'tt1170222',

'tt0295081',

'tt4369244',

'tt2781594',

'tt1105316',

'tt3840030',

'tt2579722',

'tt0072546',

'tt4628790',

'tt0046590',

'tt2184509',

'tt0497854',

'tt0363323',

'tt1458207',

'tt0439356',

'tt0377146',

'tt0954318',

'tt2214505',

'tt2435530',

'tt0473419',

'tt0768151',

'tt0439365',

'tt0278177',

'tt6473824',

'tt0187632',

'tt0391666',

'tt0465344',

'tt2189892',

'tt6586510',

'tt2374870',

'tt2111994',

'tt4588734',

'tt0863047',

'tt1579108',

'tt0984168',

'tt6752226',

'tt0856723',

'tt0416347',

'tt5571740',

'tt1552185',

'tt3595870',

'tt1728864',

'tt1062185',

'tt0380949',

'tt1013861',

'tt0848174',

'tt0321000',

'tt1855738',

'tt0363335',

'tt0420381',

'tt1814550',

'tt1987353',

'tt0187654',

'tt1461569',

'tt1850160',

'tt0954661',

'tt0198095',

'tt4012388',

'tt0482028',

'tt0176381',

'tt0419307',

'tt1684732',

'tt5154762',

'tt3139774',

'tt0819708',

'tt0888280',

'tt6021260',

'tt0185065',

'tt6059298',

'tt0273025',

'tt1888795',

'tt1821879',

'tt0476038',

'tt1830924',

'tt1368470',

'tt1361721',

'tt2647792',

'tt0302163',

'tt0292859',

'tt0243082',

'tt4654650',

'tt0298682',

'tt1534856',

'tt3097134',

'tt2582840',

'tt1478217',

'tt0374366',

'tt1631948',

'tt0368494',

'tt1721347',

'tt5319670',

'tt1684855',

'tt5209280',

'tt6217260',

'tt6842890',

'tt5040090',

'tt3501210',

'tt0367323',

'tt0397012',

'tt0954837',

'tt1784056',

'tt3228548',

'tt0861753',

'tt0933898',

'tt0433705',

'tt0287845',

'tt0329816',

'tt3548386',

'tt0410958',

'tt0057740',

'tt5583124',

'tt1440045',

'tt0810737',

'tt0989753',

'tt1313075',

'tt1073528',

'tt0310516',

'tt1642103',

'tt0448973',

'tt0302098',

'tt0805368',

'tt1124662',

'tt0324891',

'tt0423631',

'tt2226096',

'tt0046641',

'tt1519575',

'tt0853078',

'tt0118423',

'tt4052124',

'tt3703500']

# This processes the oringinal list of 600 ids, minus the ones that were successfully pulled,

# into groups of 100 + 7 in last list

# break up the missing list into groups of 100

subset_loser_list = []

print len(missing_losers)

for i in range(len(missing_losers)/100):

temp_list = []

for j in range(100):

temp_list.append(missing_losers[i*100 + j])

subset_loser_list.append(temp_list)

# get last 7

for j in range(500, len(missing_losers)):

temp_list = []

for j in range(500, len(missing_losers)):

temp_list.append(missing_losers[j])

# After reprocessing the first list of ids a 2nd time, there are still not enough samples of low rated shows

# A third list of 600 low rated shows was scraped from IMDB, and this list is broken into subsets of 100 here

subset_loser_list2 = []

print len(loser_list)

for i in range(len(loser_list)/100):

temp_list = []

for j in range(100):

temp_list.append(loser_list[i*100 + j])

subset_loser_list2.append(temp_list)

['tt0773264',

'tt1798695',

'tt1307083',

'tt4845734',

'tt0046641',

'tt1519575',

'tt0853078',

'tt0118423',

'tt0284767',

'tt4052124',

'tt0878801',

'tt3703500',

'tt1105170',

'tt4363582',

'tt3155428',

'tt0362350',

'tt0287196',

'tt2766052',

'tt0405545',

'tt0262975',

'tt0367278',

'tt7134262',

'tt1695352',

'tt0421470',

'tt2466890',

'tt0343305',

'tt1002739',

'tt1615697',

'tt0274262',

'tt0465320',

'tt1388381',

'tt0358889',

'tt1085789',

'tt1011591',

'tt0364804',

'tt1489335',

'tt3612584',

'tt0363377',

'tt0111930',

'tt0401913',

'tt0808086',

'tt0309212',

'tt5464192',

'tt0080250',

'tt4533338',

'tt4741696',

'tt1922810',

'tt1793868',

'tt4789316',

'tt0185054',

'tt1079622',

'tt1786048',

'tt0790508',

'tt1716372',

'tt0295098',

'tt3409706',

'tt0222574',

'tt2171325',

'tt0442643',

'tt2142117',

'tt0371433',

'tt0138244',

'tt1002010',

'tt0495557',

'tt1811817',

'tt5529996',

'tt1352053',

'tt0439346',

'tt0940147',

'tt3075138',

'tt1974439',

'tt2693842',

'tt0092325',

'tt6772826',

'tt1563069',

'tt0489598',

'tt0142055',

'tt1566154',

'tt0338592',

'tt0167515',

'tt2330327',

'tt1576464',

'tt2389845',

'tt0186747',

'tt0355096',

'tt1821877',

'tt0112033',

'tt1792654',

'tt0472243',

'tt6453018',

'tt3648886',

'tt1599374',

'tt2946482',

'tt4672020',

'tt1016283',

'tt2649480',

'tt1229945',

'tt2390606',

'tt1876612',

'tt0140732']

# This block calls the API. It is run repeatedly with each new sublist of 100 show ids, sleeping 10

# seconds between each request. There is a do not run flag that will prevent running this block if the

# notebook is restarted. The first time it was executed, a new dataframe called "more_losers" was initialized,

# and then commented out for subsequent executions so the data returned in eacn subsequent data request will

# be appended to the bottom of the dataframe.

# After collection is complete, set flag to prevent running this block unnecessarily if notebook is restarted

import time

DO_NOT_RUN = True

if not DO_NOT_RUN:

# responses = []

# more_losers = pd.DataFrame()

for loser_id in subset_loser_list2[0]: # change the index and re-run to accesses each set of 100 ids

time.sleep(10)

try:

# Get the tv show info from the api

url = "http://api.tvmaze.com/lookup/shows?imdb=" + loser_id

r = requests.get(url)

# convert the return data to a dictionary

json_data = r.json()

# load a temp datafram with the dictionary, then append to the composite dataframe

temp_df = pd.DataFrame.from_dict(json_data, orient='index', dtype=None)

ttemp_df = temp_df.T # Was not able to load json in column orientation, so must transpose

more_losers = more_losers.append(ttemp_df, ignore_index=True)

stat = ''

except:

stat = 'failed'

print loser_id, stat, r.status_code

res = [loser_id, stat, r.status_code]

responses.append(res)

losers.head()

tt0773264 200

tt1798695 200

tt1307083 200

tt4845734 200

tt0046641 failed 404

tt1519575 failed 404

tt0853078 failed 404

tt0118423 failed 404

tt0284767 200

tt4052124 failed 404

tt0878801 200

tt3703500 200

tt1105170 failed 404

tt4363582 failed 404

tt3155428 200

tt0362350 failed 404

tt0287196 200

tt2766052 200

tt0405545 failed 404

tt0262975 200

tt0367278 failed 404

tt7134262 failed 404

tt1695352 failed 404

tt0421470 failed 404

tt2466890 failed 404

tt0343305 failed 404

tt1002739 failed 404

tt1615697 failed 404

tt0274262 failed 404

tt0465320 failed 404

tt1388381 200

tt0358889 200

tt1085789 failed 404

tt1011591 200

tt0364804 failed 404

tt1489335 failed 404

tt3612584 200

tt0363377 failed 404

tt0111930 failed 404

tt0401913 failed 404

tt0808086 failed 404

tt0309212 failed 404

tt5464192 200

tt0080250 failed 404

tt4533338 failed 404

tt4741696 200

tt1922810 failed 404

tt1793868 failed 404

tt4789316 failed 404

tt0185054 failed 404

tt1079622 failed 404

tt1786048 failed 404

tt0790508 failed 404

tt1716372 failed 404

tt0295098 failed 404

tt3409706 failed 404

tt0222574 failed 404

tt2171325 failed 404

tt0442643 failed 404

tt2142117 failed 404

tt0371433 failed 404

tt0138244 failed 404

tt1002010 failed 404

tt0495557 failed 404

tt1811817 failed 404

tt5529996 failed 404

tt1352053 failed 404

tt0439346 failed 404

tt0940147 failed 404

tt3075138 failed 404

tt1974439 200

tt2693842 failed 404

tt0092325 200

tt6772826 200

tt1563069 200

tt0489598 200

tt0142055 failed 404

tt1566154 200

tt0338592 200

tt0167515 200

tt2330327 200

tt1576464 failed 404

tt2389845 failed 404

tt0186747 200

tt0355096 failed 404

tt1821877 200

tt0112033 failed 404

tt1792654 failed 404

tt0472243 failed 404

tt6453018 failed 404

tt3648886 failed 404

tt1599374 200

tt2946482 200

tt4672020 failed 404

tt1016283 failed 404

tt2649480 200

tt1229945 200

tt2390606 failed 404

tt1876612 200

tt0140732 failed 404

for i in range(len(more_losers)):

print more_losers.loc[i, 'externals']

{u'thetvdb': 279947, u'tvrage': 37045, u'imdb': u'tt3595870'}

{u'thetvdb': None, u'tvrage': 13173, u'imdb': u'tt0848174'}

{u'thetvdb': 72157, u'tvrage': None, u'imdb': u'tt0374366'}

{u'thetvdb': 218241, u'tvrage': None, u'imdb': u'tt1684855'}

{u'thetvdb': 327908, u'tvrage': None, u'imdb': u'tt6842890'}

{u'thetvdb': 279810, u'tvrage': None, u'imdb': u'tt3501210'}

{u'thetvdb': 283658, u'tvrage': None, u'imdb': u'tt0367323'}

{u'thetvdb': 271341, u'tvrage': 33650, u'imdb': u'tt2633208'}

{u'thetvdb': 260677, u'tvrage': None, u'imdb': u'tt2579722'}

{u'thetvdb': 77616, u'tvrage': None, u'imdb': u'tt0072546'}

{u'thetvdb': 74419, u'tvrage': None, u'imdb': u'tt0458269'}

{u'thetvdb': None, u'tvrage': None, u'imdb': u'tt0249275'}

{u'thetvdb': 282527, u'tvrage': 42189, u'imdb': u'tt2815184'}

{u'thetvdb': 246631, u'tvrage': None, u'imdb': u'tt1753229'}

{u'thetvdb': 82500, u'tvrage': None, u'imdb': u'tt1240534'}

{u'thetvdb': 206381, u'tvrage': 26873, u'imdb': u'tt1999642'}

{u'thetvdb': 284259, u'tvrage': None, u'imdb': u'tt3784176'}

{u'thetvdb': 250186, u'tvrage': None, u'imdb': u'tt1958848'}

{u'thetvdb': 320679, u'tvrage': None, u'imdb': u'tt5684430'}

{u'thetvdb': 74181, u'tvrage': 6494, u'imdb': u'tt0134269'}

{u'thetvdb': 84159, u'tvrage': 19672, u'imdb': u'tt1252370'}

{u'thetvdb': 300105, u'tvrage': 48178, u'imdb': u'tt3824018'}

{u'thetvdb': 264850, u'tvrage': None, u'imdb': u'tt2555880'}

{u'thetvdb': 277020, u'tvrage': 35629, u'imdb': u'tt3310544'}

{u'thetvdb': 254524, u'tvrage': 31887, u'imdb': u'tt2125758'}

{u'thetvdb': 271916, u'tvrage': None, u'imdb': u'tt1973047'}

{u'thetvdb': 82005, u'tvrage': None, u'imdb': u'tt0934701'}

{u'thetvdb': 250472, u'tvrage': None, u'imdb': u'tt2059031'}

{u'thetvdb': 81491, u'tvrage': None, u'imdb': u'tt1056536'}

{u'thetvdb': 137691, u'tvrage': None, u'imdb': u'tt1618950'}

{u'thetvdb': 74395, u'tvrage': 3883, u'imdb': u'tt0115206'}

{u'thetvdb': 298860, u'tvrage': 50010, u'imdb': u'tt4575056'}

{u'thetvdb': 269115, u'tvrage': 33511, u'imdb': u'tt2889104'}

{u'thetvdb': 285008, u'tvrage': None, u'imdb': u'tt2644204'}

{u'thetvdb': 82237, u'tvrage': None, u'imdb': u'tt1210781'}

{u'thetvdb': 314998, u'tvrage': None, u'imdb': u'tt0048898'}

{u'thetvdb': 276337, u'tvrage': None, u'imdb': u'tt3398108'}

{u'thetvdb': 221621, u'tvrage': None, u'imdb': u'tt1252620'}

{u'thetvdb': 269059, u'tvrage': 35857, u'imdb': u'tt2901828'}

{u'thetvdb': 273303, u'tvrage': 35560, u'imdb': u'tt3006666'}

{u'thetvdb': 260473, u'tvrage': 30918, u'imdb': u'tt2197994'}

{u'thetvdb': 83313, u'tvrage': None, u'imdb': u'tt1263594'}

{u'thetvdb': 80117, u'tvrage': 7218, u'imdb': u'tt0497079'}

{u'thetvdb': 174991, u'tvrage': 25843, u'imdb': u'tt1755893'}

{u'thetvdb': 71424, u'tvrage': None, u'imdb': u'tt0329824'}

{u'thetvdb': 258632, u'tvrage': 31545, u'imdb': u'tt2245937'}

{u'thetvdb': 259235, u'tvrage': None, u'imdb': u'tt2147632'}

{u'thetvdb': 297209, u'tvrage': 38100, u'imdb': u'tt3218114'}

{u'thetvdb': 185651, u'tvrage': None, u'imdb': u'tt1583417'}

{u'thetvdb': 250370, u'tvrage': 28934, u'imdb': u'tt1963853'}

{u'thetvdb': 129051, u'tvrage': None, u'imdb': u'tt1520150'}

{u'thetvdb': 76370, u'tvrage': None, u'imdb': u'tt0236907'}

{u'thetvdb': 316174, u'tvrage': None, u'imdb': u'tt5865052'}

{u'thetvdb': 82304, u'tvrage': 19011, u'imdb': u'tt1231448'}

{u'thetvdb': 289640, u'tvrage': 46963, u'imdb': u'tt4287478'}

{u'thetvdb': 249750, u'tvrage': None, u'imdb': u'tt1874006'}

{u'thetvdb': 250959, u'tvrage': 28442, u'imdb': u'tt2006560'}

{u'thetvdb': 281375, u'tvrage': 38313, u'imdb': u'tt3565412'}

{u'thetvdb': 274414, u'tvrage': None, u'imdb': u'tt3396736'}

{u'thetvdb': 271820, u'tvrage': None, u'imdb': u'tt0855313'}

{u'thetvdb': 250955, u'tvrage': None, u'imdb': u'tt2309561'}

{u'thetvdb': 273130, u'tvrage': 36774, u'imdb': u'tt3136814'}

{u'thetvdb': 84669, u'tvrage': 18525, u'imdb': u'tt1191056'}

{u'thetvdb': 74697, u'tvrage': 3348, u'imdb': u'tt0235917'}

{u'thetvdb': 76708, u'tvrage': None, u'imdb': u'tt0111892'}

{u'thetvdb': 266934, u'tvrage': None, u'imdb': u'tt2643770'}

{u'thetvdb': 79896, u'tvrage': None, u'imdb': u'tt0423657'}

{u'thetvdb': 303252, u'tvrage': None, u'imdb': u'tt5327970'}

{u'thetvdb': 256806, u'tvrage': None, u'imdb': u'tt2190731'}

{u'thetvdb': 78409, u'tvrage': None, u'imdb': u'tt0101041'}

{u'thetvdb': 274820, u'tvrage': None, u'imdb': u'tt3317020'}

{u'thetvdb': 296474, u'tvrage': 45813, u'imdb': u'tt4732076'}

{u'thetvdb': 285651, u'tvrage': 41593, u'imdb': u'tt3828162'}

{u'thetvdb': 315767, u'tvrage': None, u'imdb': u'tt5819414'}

{u'thetvdb': 287534, u'tvrage': 42884, u'imdb': u'tt4180738'}

{u'thetvdb': 76621, u'tvrage': None, u'imdb': u'tt0300802'}

{u'thetvdb': 280683, u'tvrage': 34278, u'imdb': u'tt2649738'}